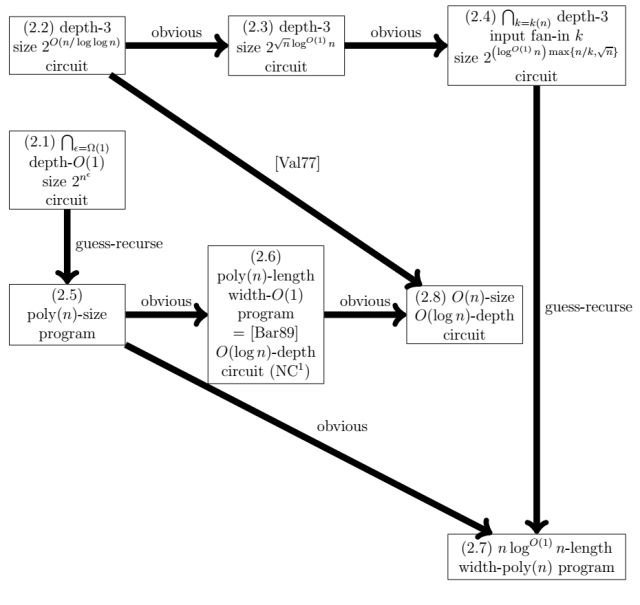

Map 1

Map 2

To understand Life, what should you study?

a. People’s dreams.

b. The AMPK gene of the fruit fly.

Studying restricted computational models corresponds to b. Just as microbes constitute a wealth of open problems whose solutions are sometimes far-reaching, so restricted computational models present a number of challenges whose study is significant. For example, Valiant’s study of arithmetic lower bounds boosted the study of superconcentrators, an influential type of graph closely related to expanders.

The maps above, taken from here, include a number of challenges together with their relationships. Arrows go towards special cases (which are presumably easier). As written in the manuscript, my main aim was to put these challenges in perspective, and to present some connections which do not seem widely known. Indeed, one specific reason why I drew the first map was the realization that an open problem that I spent some time working on can actually be solved immediately by combining known results. The problem was to show that multiparty (number-on-forehead) communication lower bounds imply correlation bounds for polynomials over GF(2). The classic work by Håstad and Goldmann does show that k-party protocols can simulate polynomials of degree k-1, and so correlation bounds for k-party protocols obviously imply the same bounds for polynomials of degree k-1. But what I wanted was a connection with worst-case communication lower bounds, to show that correlation bounds for polynomials (survey) are a prerequisite even for that.
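The simulation of degree k-1 polynomials by k-party protocols can be sketched in a few lines. This is only an illustration under stated assumptions, not code from any of the papers: the function names, the representation of a polynomial as a list of monomials, and the array `part_of` (which party has each variable on its forehead) are all hypothetical. The idea it shows is that a monomial of degree at most k-1 touches at most k-1 foreheads, so some party sees all of its variables; each party then announces the parity of the monomials assigned to it.

```python
from itertools import product

def evaluate_directly(monomials, x):
    """Evaluate a GF(2) polynomial given as a list of monomials (tuples of variable indices)."""
    return sum(all(x[v] for v in mono) for mono in monomials) % 2

def nof_simulate(monomials, part_of, k, x):
    """Number-on-forehead protocol with k parties for a polynomial of degree <= k-1.

    part_of[v] is the party (0..k-1) holding variable v on its forehead,
    so that party is the only one that cannot see x[v].
    Returns (output bit, number of bits communicated).
    """
    messages = [0] * k
    for mono in monomials:
        touched = {part_of[v] for v in mono}
        # At most k-1 foreheads are touched, so some party sees the whole monomial.
        owner = next((p for p in range(k) if p not in touched), None)
        if owner is None:
            raise ValueError("monomial touches all k parts; degree is too high")
        # The owner only reads variables that are not on its own forehead.
        assert all(part_of[v] != owner for v in mono)
        messages[owner] ^= int(all(x[v] for v in mono))
    # Each party broadcasts one bit; the answer is the XOR of the k bits.
    output = 0
    for bit in messages:
        output ^= bit
    return output, len(messages)

# Hypothetical example: 6 variables split among k = 3 parties, degree <= 2.
example_monomials = [(0, 1), (2, 5), (3,), (1, 4), ()]
example_part_of = [0, 0, 1, 1, 2, 2]
protocol_is_correct = all(
    nof_simulate(example_monomials, example_part_of, 3, x)[0]
    == evaluate_directly(example_monomials, x)
    for x in product((0, 1), repeat=6)
)
```

The protocol uses k bits of communication regardless of the number of monomials, which is why a correlation bound against k-party protocols transfers to polynomials of degree k-1.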

As it turns out, and as the arrows from (1.5) to (1.2) in the first map show, this is indeed true when k is polylogarithmic. So, if you have been trying to prove multiparty lower bounds for polylogarithmic k, you may want to try correlation bounds first. (This connection is for proving correlation bounds under some distribution, not necessarily uniform.)

Another reason why I drew the first map was to highlight a certain type of correlation bound (1.3), discussed in this paper with Razborov. It is a favorite example of mine of a seemingly very basic open problem that is, again, a roadblock for much of what we’d like to know. The problem is to obtain correlation bounds against real-valued polynomials, with the convention that whenever the polynomial does not output a boolean value we count that as a mistake, thus making the goal of proving a lower bound presumably easier. Amazingly, the following is still open:

Prove that the correlation of the parity function on n bits is at most 1/n with any real polynomial of degree log(n).

To be precise, correlation is defined as the probability that the polynomial correctly computes parity, minus 1/2. For example, the constant polynomial 1 has correlation zero with parity: it gets it right half the time. The polynomial x1+x2+…+xn, by contrast, does a lot worse: it has negative correlation with parity, or in fact with any boolean function, simply because its output is unlikely to be in {0,1}.
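For small n the definition can be checked by brute force. The sketch below is my own illustration (the function names are hypothetical); it enumerates all inputs, counts an output as correct only when it equals the parity bit exactly, and subtracts 1/2.

```python
from fractions import Fraction
from itertools import product

def parity(x):
    """Parity of a tuple of bits."""
    return sum(x) % 2

def correlation_with_parity(poly, n):
    """Pr_x[poly(x) == parity(x)] - 1/2 over uniform x in {0,1}^n.

    A non-boolean output can never equal the parity bit,
    so it is automatically counted as a mistake.
    """
    correct = sum(1 for x in product((0, 1), repeat=n) if poly(x) == parity(x))
    return Fraction(correct, 2 ** n) - Fraction(1, 2)

def constant_one(x):
    return 1

def sum_of_inputs(x):
    return sum(x)

n = 4
corr_constant = correlation_with_parity(constant_one, n)  # 0
corr_sum = correlation_with_parity(sum_of_inputs, n)      # -3/16
```

For n = 4 the sum is correct only on the all-zero input and on the four inputs of weight one, which gives 5/16 - 1/2 = -3/16. In general the sum is correct on n+1 of the 2^n inputs, so its correlation tends to -1/2.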

What we do in the paper, in my opinion, is to begin to formalize the intuition that these polynomials cannot do much. We show that the correlation with parity is zero (not very small, but actually zero) as long as the polynomial has degree 0.001 loglog(n). This is different from the more familiar models of polynomials modulo m or sign polynomials, because those can achieve non-zero correlation even with constant degree.

On the other hand, with a simple construction, we can obtain non-zero correlation with polynomials of degree O(sqrt(n)). Note the huge gap with the 0.001 loglog(n) lower bound.

Question: what is the largest degree for which the correlation is zero?

The second map gives another slice of open problems. It highlights how superlinear-length lower bounds for branching programs are necessary for several notorious circuit lower bounds.

A third map was scheduled to include Valiant’s long-standing rigidity question and algebraic lower bounds. In the end it was dropped because it required a lot of definitions while I knew of very few arrows. But one problem that was meant to be there is a special case of the rigidity question from this work with Servedio. The question is basically a variant of the above question about real polynomials, where instead of considering low-degree polynomials we consider sparse polynomials. What may not be immediately evident, although in hindsight it is technically immediate, is that this problem is indeed a special case of the rigidity question. The question is to improve on the rigidity bounds in this special case.

In the paper we prove some variant that does not seem to be known in the rigidity world, but what I want to focus on right now is an application that such bounds would have, if established for the Inner Product function modulo 2 (IP). They would imply that IP cannot be computed by polynomial-size AC0-Parity circuits, i.e., AC0 circuits which take as input a layer of parity gates that’s connected to the input. It seems ridiculous to think that IP could be computed by such circuits, of course. It is easy to handle Or-And-Parity circuits, but circuits of higher depth have resisted attacks.

The question was asked again by Akavia, Bogdanov, Guo, Kamath, and Rosen.

Cheraghchi, Grigorescu, Juba, Wimmer, and Xie have just obtained some lower bounds for this problem. For And-Or-And-Parity circuits they obtain almost quadratic lower bounds; the bounds degrade for larger depth but stay polynomial. Their proof of the quadratic lower bound looks nice to me. Their first moves are relatively standard: first they reduce to an approximation question for Or-And-Parity circuits; then they fix half the variables of IP so that IP becomes a parity that is “far” from the parities that are input to the DNF. The more interesting step of the argument, in my opinion, comes at this point. They consider the random variable N that counts the number of And-Parity gates that evaluate to one, and they observe that several moments of this variable are the same whether the parity that comes from IP is zero or one. From this, they use approximation theory to bound the probability that N is zero in the two cases. They get that these probabilities are also quite close, as long as the circuit is not too large, which shows that the circuit is not correctly computing IP.
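The step of fixing half the variables is easy to see concretely. The sketch below is my own illustration with hypothetical names, and it assumes the standard definition IP(x, y) = x1·y1 + … + xn·yn mod 2, which the post does not spell out. It only shows that fixing x turns IP into a parity of the y variables indexed by the ones of x; it does not attempt to choose x so that this parity is “far” from the parities feeding the DNF, which is the part that depends on the circuit.

```python
from itertools import product

def inner_product_mod2(x, y):
    """IP(x, y) = sum_i x_i * y_i mod 2 (assumed standard definition)."""
    return sum(a & b for a, b in zip(x, y)) % 2

def parity_on(support, y):
    """Parity of the bits of y at the positions listed in support."""
    return sum(y[i] for i in support) % 2

# Hypothetical fixing of the x half of the input.
x_fixed = (1, 0, 1, 1)
support = [i for i, bit in enumerate(x_fixed) if bit]

# After the fixing, IP agrees with a parity on every setting of y.
restriction_is_parity = all(
    inner_product_mod2(x_fixed, y) == parity_on(support, y)
    for y in product((0, 1), repeat=len(x_fixed))
)
```

Different choices of `x_fixed` give different parities, which is what leaves room to pick one that the circuit's input parities do not approximate.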